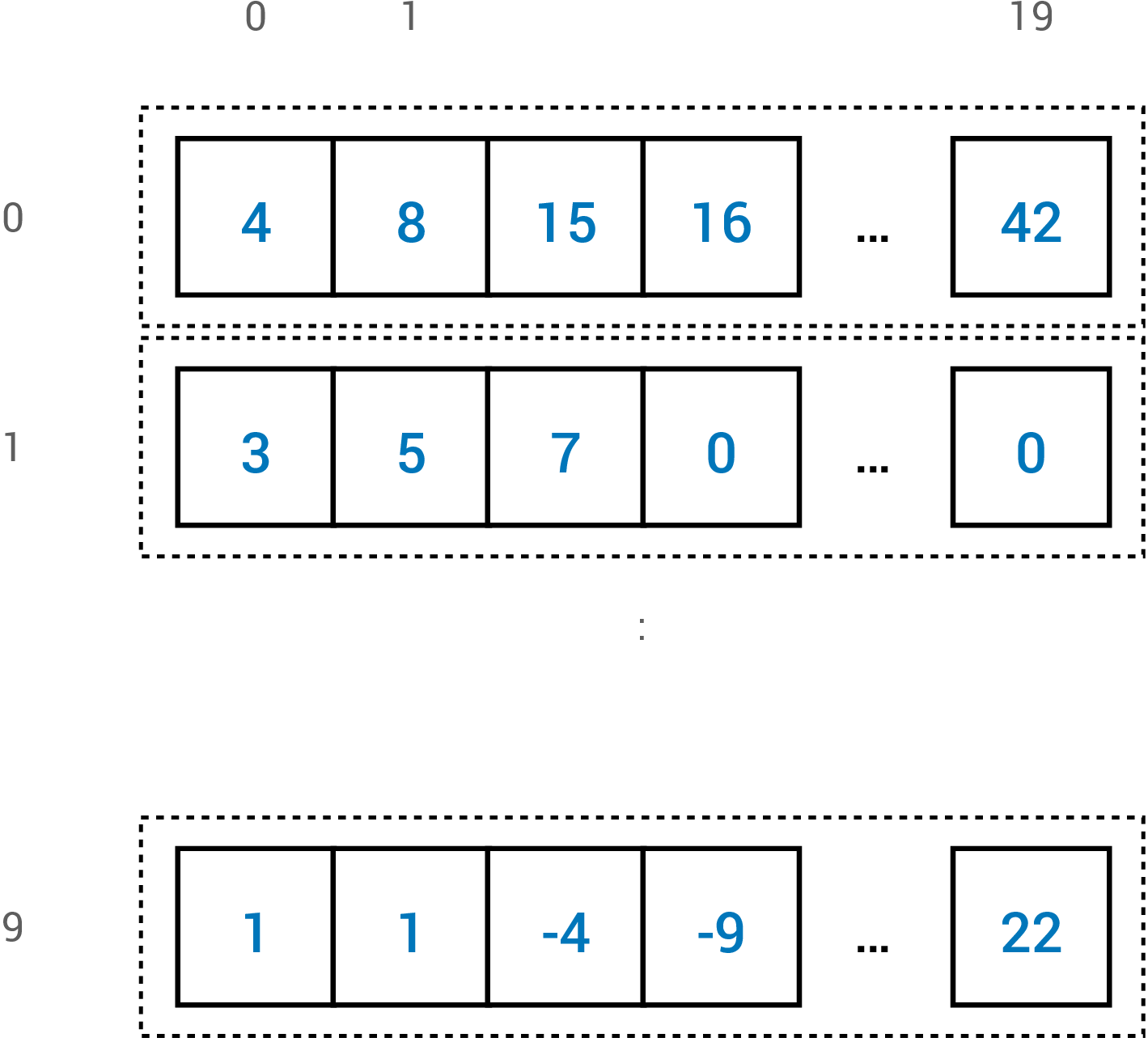

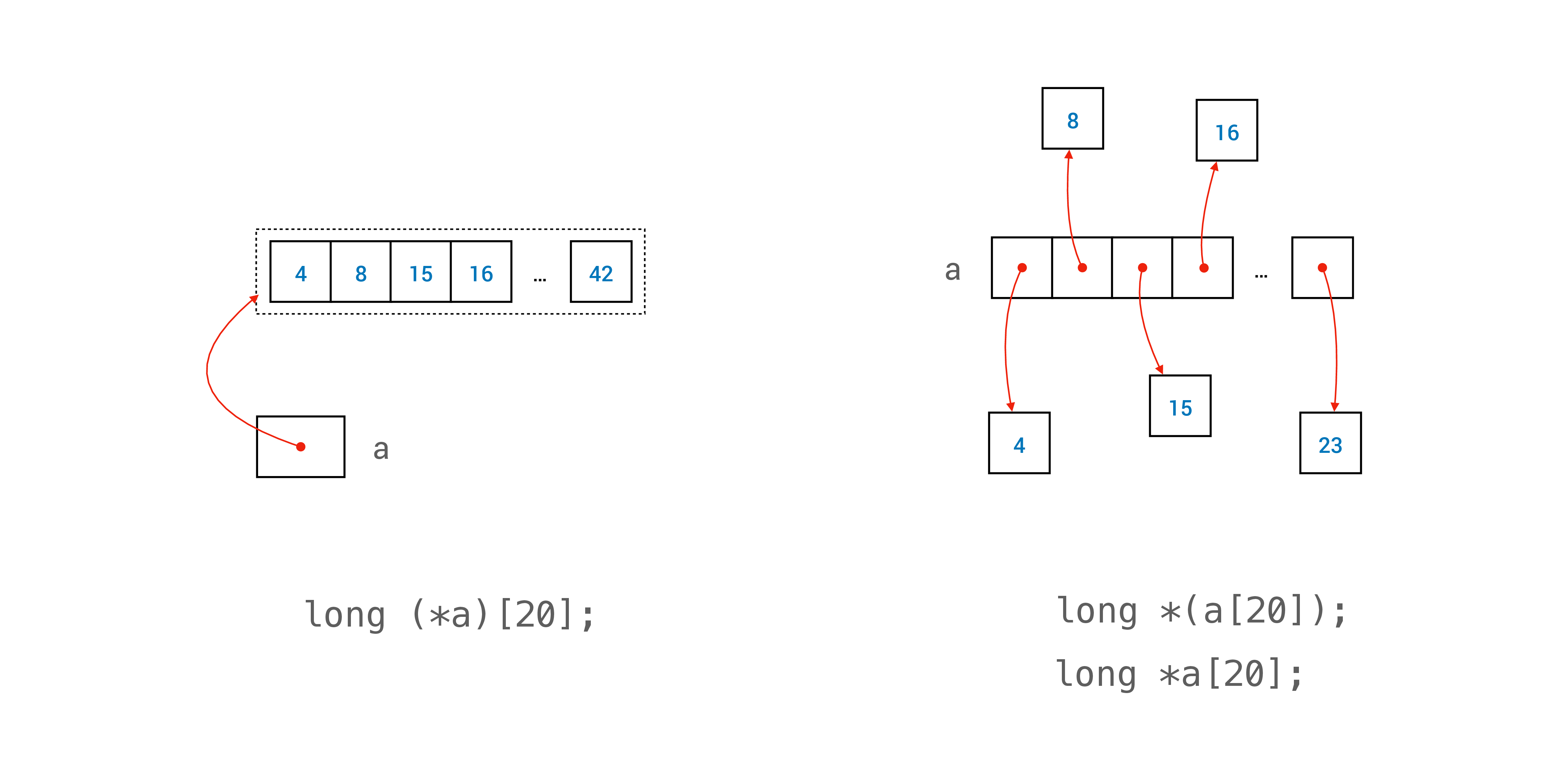

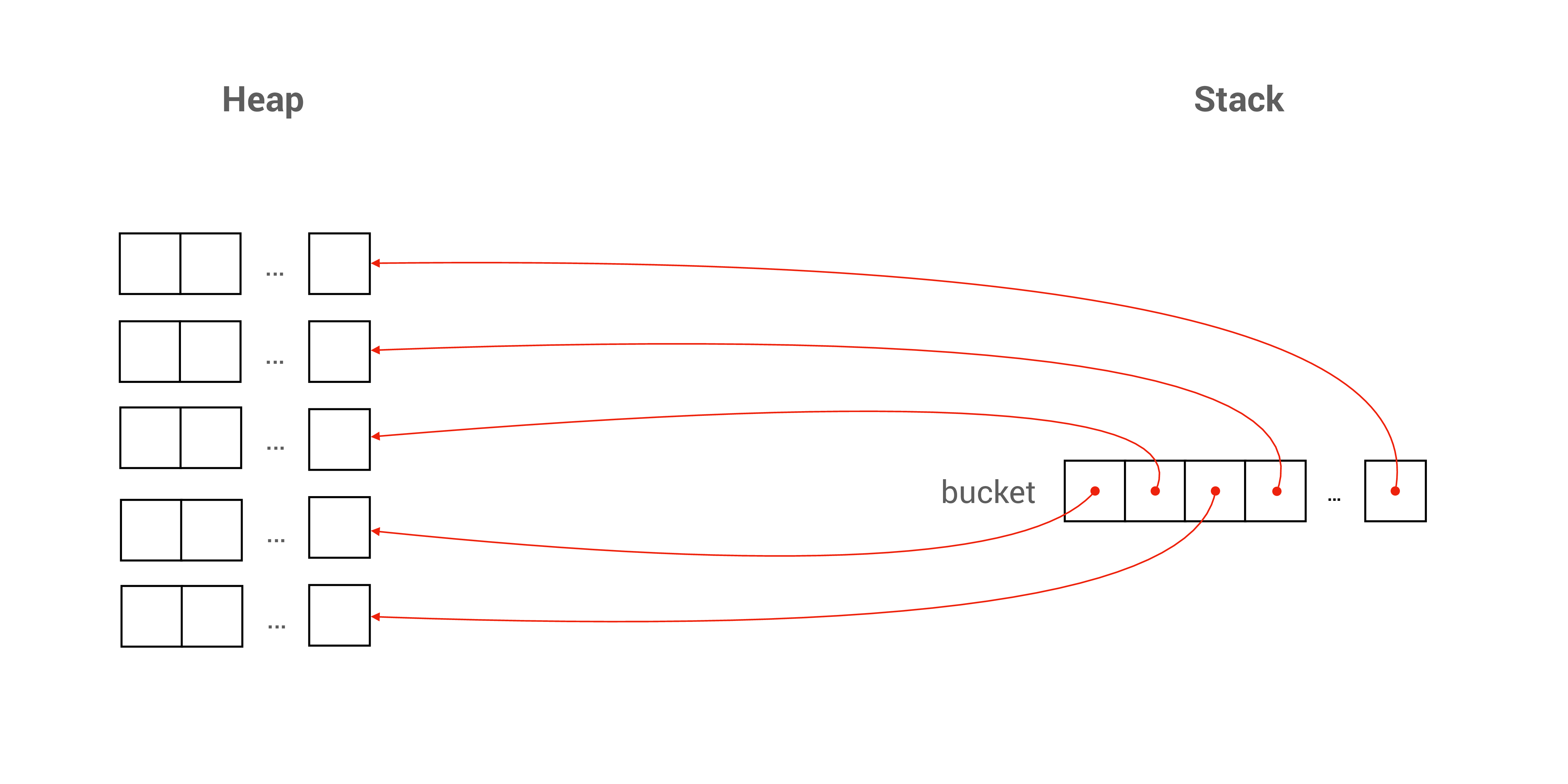

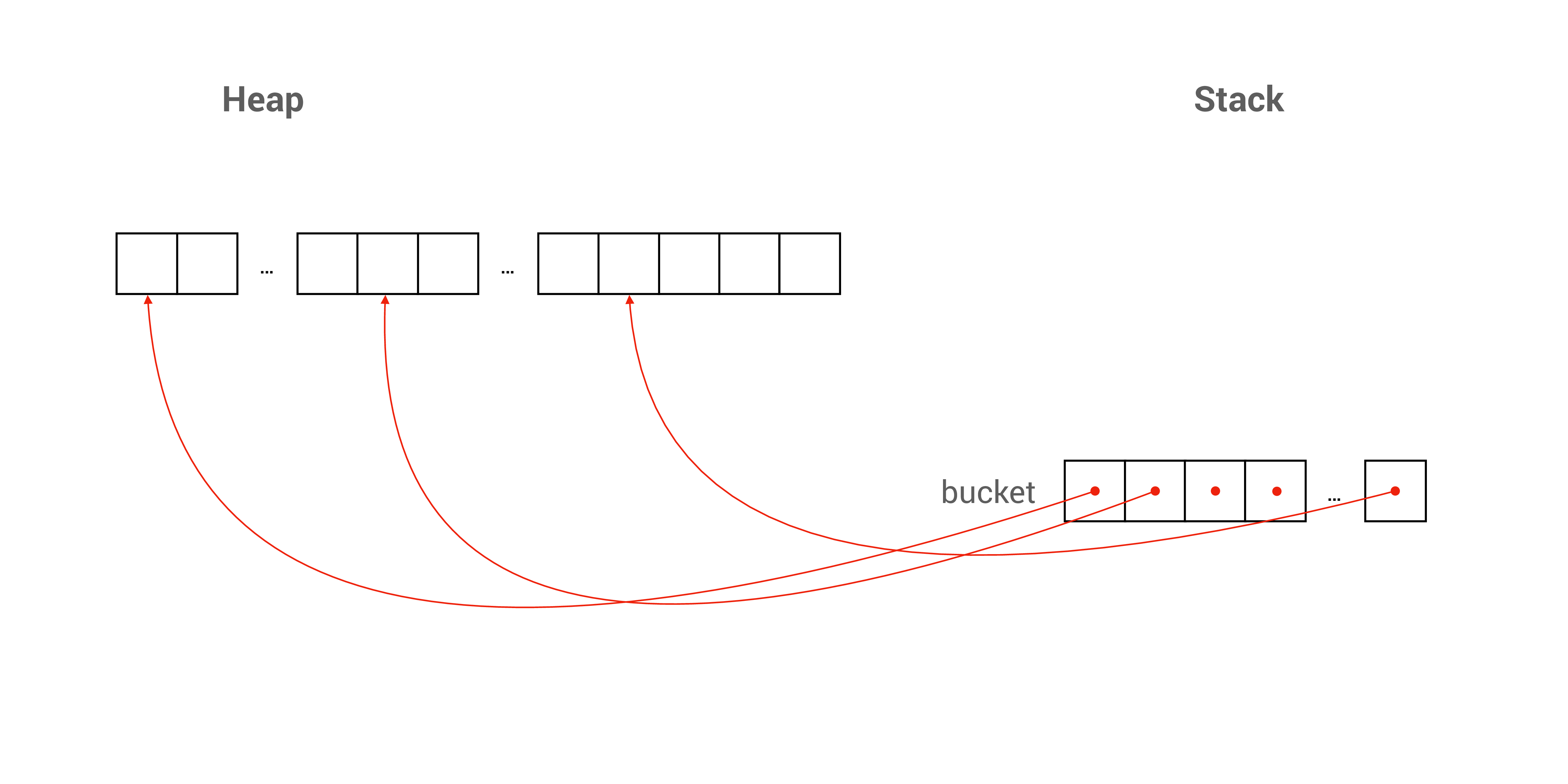

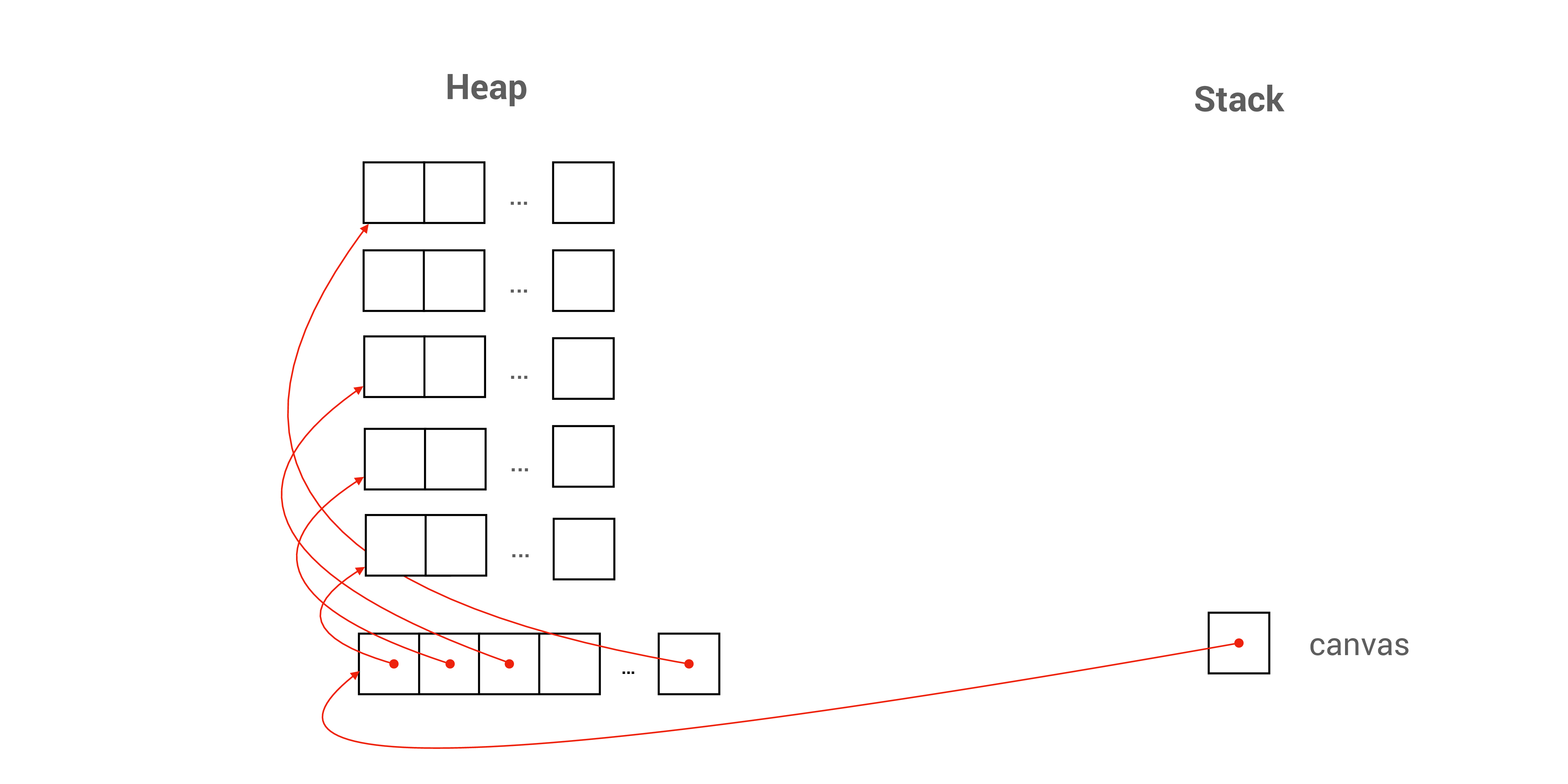

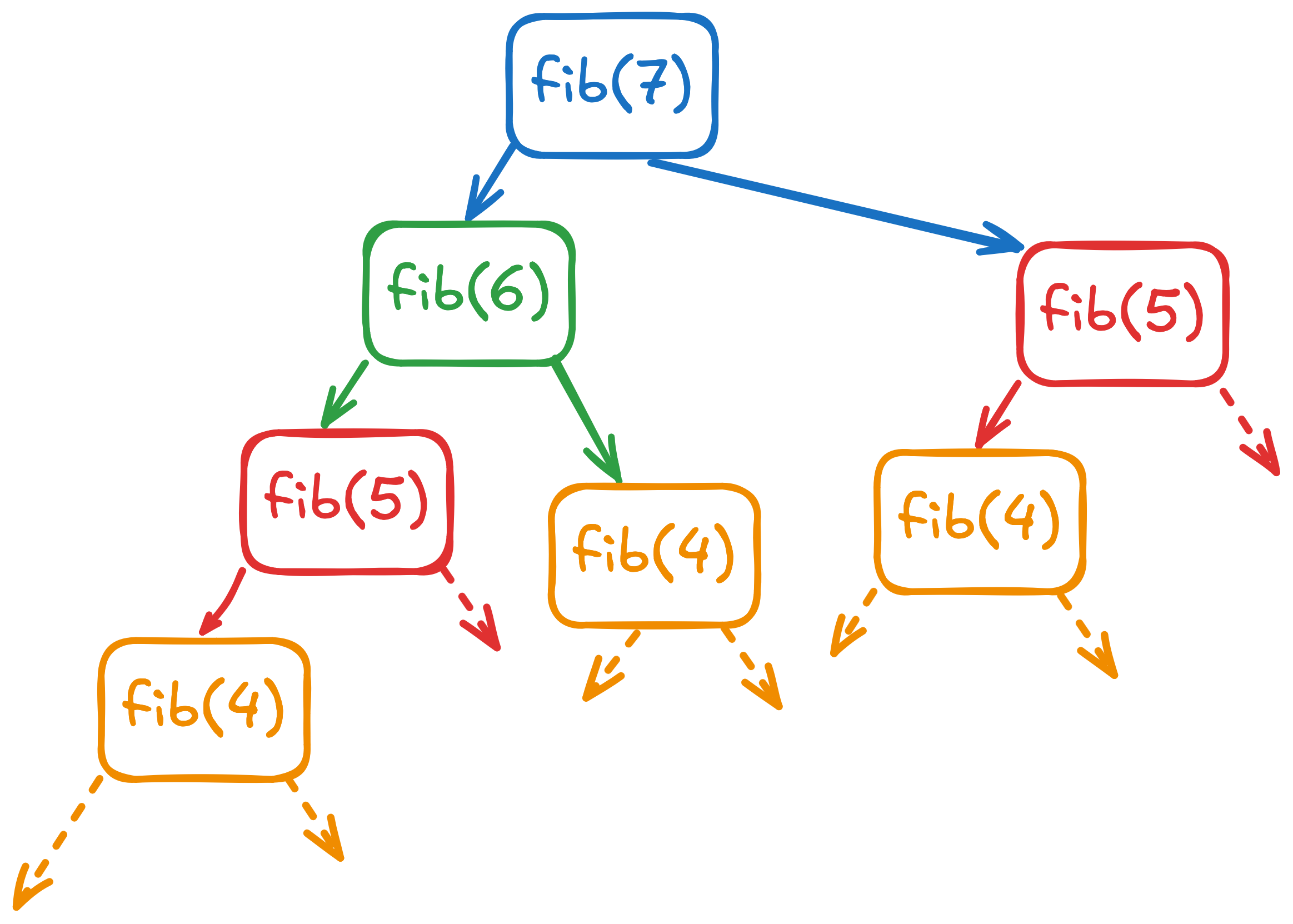

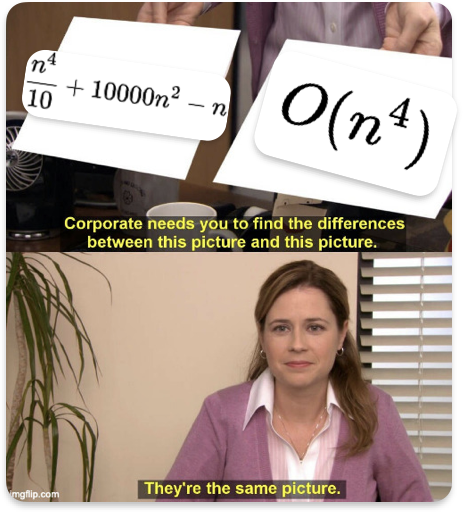

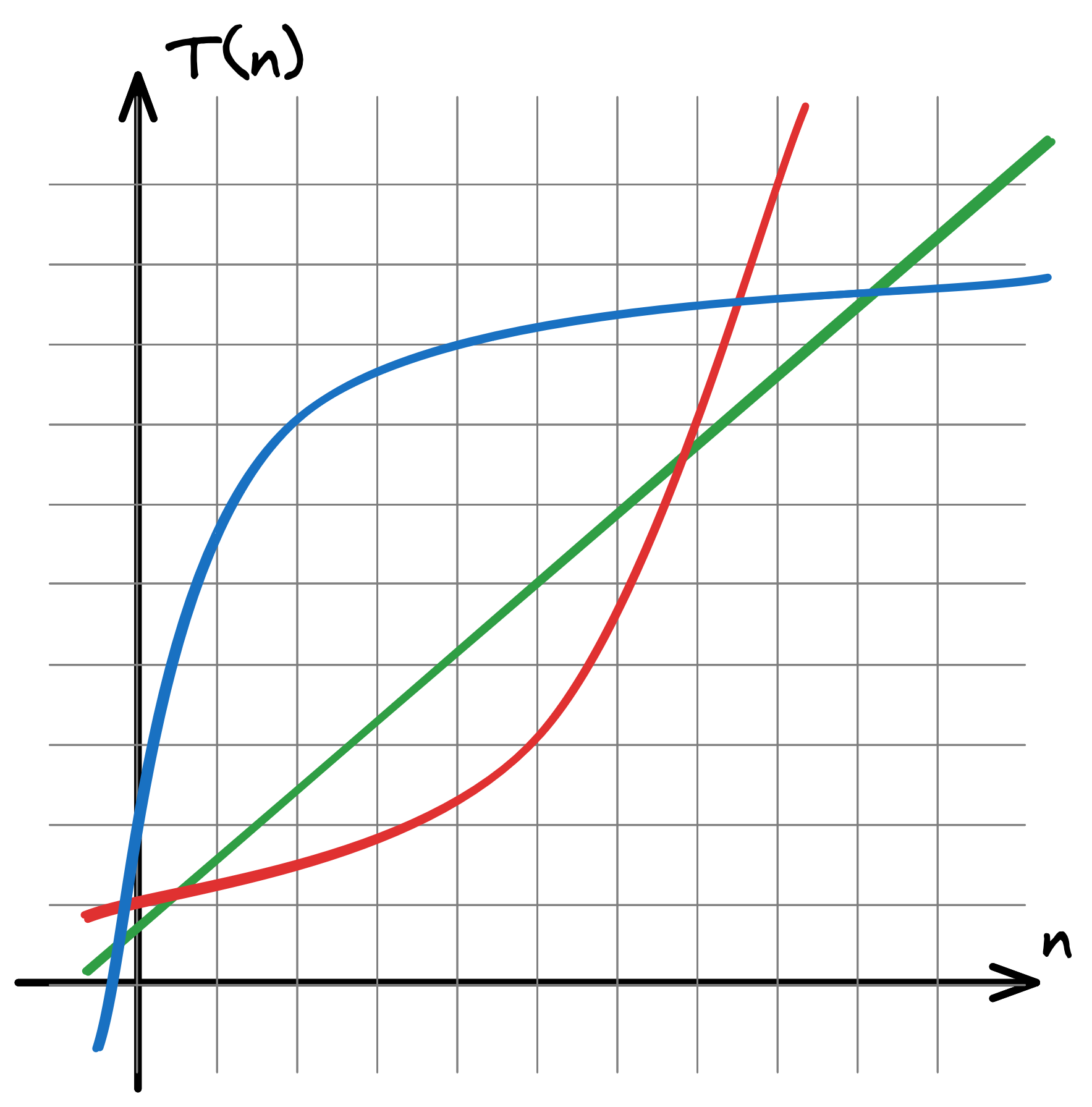

class: middle, center

# Lecture 8

### 14 October 2024

.smaller[
**Admin Matters**<br>
Unit 19: **Multi-D Arrays**<br>
Unit 20: **Efficiency**<br>
]

---

### PE1

- When: 22 October 2024, 6 - 9 PM
- What: Unit 1 to Unit 18 (1D Array)
- File a ticket by Friday if you need alternative arrangements

---
class: middle,center

## Multi-dimensional Arrays

---

.small[
```C
long matrix[10][20];
  :
matrix[i][j] = matrix[i][j] + 4;
```
]

.w30[]

---

`matrix[i]` decays to `&matrix[i][0]`

`&matrix[i][0]` is a pointer to a `long`.

---

`matrix` decays to `&matrix[0]`

`&matrix[0]` is a pointer to an array of 20 `long` values.

---
class: top

### Declare a Pointer to an Array

```C
long (*a)[20];
long b[20];
a = &b;
```

---

### Passing a 2D array into a function:

.tiny[
```C
void foo(size_t nrows, size_t ncols, long matrix[10][20]) { .. }
void foo(size_t nrows, size_t ncols, long matrix[][20]) { .. }
void foo(size_t nrows, size_t ncols, long (*matrix)[20]) { .. }
```
]

---

### Pointer to Array vs. Array of Pointers

.fit80[]

---

### A Fixed Array of Dynamic Arrays

.smaller[
```C
double *buckets[10];
size_t num_of_cols = cs1010_read_size_t();
for (long i = 0; i < 10; i += 1) {
  buckets[i] = calloc(num_of_cols, sizeof(double));
  // check for errors
}
  :
for (long i = 0; i < 10; i += 1) {
  free(buckets[i]);
}
```
]

---

### A Fixed Array of Dynamic Arrays

.fit80[]

---

### A Fixed Array of Dynamic Arrays

.smaller[
```C
double *buckets[10];
size_t num_of_cols = cs1010_read_size_t();
buckets[0] = calloc(num_of_cols * 10, sizeof(double));
// handle error
for (size_t i = 1; i < 10; i += 1) {
  buckets[i] = buckets[i - 1] + num_of_cols;
}
  :
free(buckets[0]);
```
]

---

### A Fixed Array of Dynamic Arrays

.fit80[]

---

### A Dynamic Array of Dynamic Arrays

.tiny[
```C
double **canvas;
size_t num_of_rows = cs1010_read_size_t();
size_t num_of_cols = cs1010_read_size_t();
canvas = calloc(num_of_rows, sizeof(double *));
// handle error here
for (size_t i = 0; i < num_of_rows; i += 1) {
  canvas[i] = calloc(num_of_cols, sizeof(double));
  // handle error
}
  :
// free every row that was allocated, then the array of row pointers
for (size_t i = 0; i < num_of_rows; i += 1) {
  free(canvas[i]);
}
free(canvas);
```
]

---

### A Dynamic Array of Dynamic Arrays

.fit80[]

---

### A Dynamic Array of Dynamic Arrays

.tiny[
```C
double **canvas;
size_t num_of_rows = cs1010_read_size_t();
size_t num_of_cols = cs1010_read_size_t();
canvas = calloc(num_of_rows, sizeof(double *));
// handle error
canvas[0] = calloc(num_of_cols * num_of_rows, sizeof(double));
// handle error
// point each remaining row into the single contiguous block
for (size_t i = 1; i < num_of_rows; i += 1) {
  canvas[i] = canvas[i - 1] + num_of_cols;
}
  :
free(canvas[0]);
free(canvas);
```
]

---

### Jagged Array

.smaller[
```C
double *half_square[10];
for (size_t i = 0; i < 10; i += 1) {
  half_square[i] = calloc(i+1, sizeof(double));
  // handle error
}
```
]

---
class: middle, center

# Efficiency

---
class: middle, center

## 1. No Redundant Work

---

.smaller[
```C
bool is_prime(long n) {
  bool is_prime = true;
  for (long i = 2; i <= n - 1; i += 1) {
    if (n % i == 0) {
      is_prime = false;
    }
  }
  return is_prime;
}
```
]

--

Observation 1: the moment we know $n$ is divisible by $i$, we can return `false` immediately.

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i <= n - 1; i += 1) {
    if (n % i == 0) {
      return false; // line changed here
    }
  }
  return true; // line changed here
}
```
]

--

.small[
Maybe we could write this instead. Does this code always run with fewer iterations?
]

--

No. If $n$ is prime, we still iterate until the end.

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i <= n - 1; i += 1) {
    if (n % i == 0) {
      return false; // line changed here
    }
  }
  return true; // line changed here
}
```
]

So the number of iterations depends on the input, and there are still inputs that cause this program to use the maximum number of iterations (around $n$ iterations).

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i <= n - 1; i += 1) {
    if (n % i == 0) {
      return false; // line changed here
    }
  }
  return true; // line changed here
}
```
]

A better optimisation is one that works __for all__ possible inputs.
--

Can we find such an optimisation here?

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i <= n - 1; i += 1) {
    if (n % i == 0) {
      return false; // line changed here
    }
  }
  return true; // line changed here
}
```
]

Answer: We know that if $n = a \times b$, then at least one of $a$ or $b$ is at most $\sqrt{n}$.

--

So if $n$ is not prime, it has a divisor $i$ where $1 < i \leq \sqrt{n}$.

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i*i <= n; i++) {
    if (n % i == 0) {
      return false;
    }
  }
  return true;
}
```
]

So we can stop our iterations at $\sqrt{n}$.

--

Importantly, notice here that it does not matter if $n$ is prime or not. In either case, we can stop our iterations at $\sqrt{n}$.

---

- Return `false` as soon as we know $n$ is not a prime
- Do not check divisors that are redundant

---
class: middle, center

## 2. No Duplication

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; (double) i <= sqrt(n); i += 1) {
    if (n % i == 0) {
      return false;
    }
  }
  return true;
}
```
]

---

.smaller[
```C
bool is_prime(long n) {
  double limit = sqrt(n);
  for (long i = 2; i <= limit; i += 1) {
    if (n % i == 0) {
      return false;
    }
  }
  return true;
}
```
]

---

.tiny[
```C
long find_max(const long list[], long start, long end) {
  if (start >= end - 1) {
    return list[start];
  }
  long mid = (start + end) / 2;
  if (find_max(list, start, mid) >= find_max(list, mid, end)) {
    return find_max(list, start, mid);
  }
  return find_max(list, mid, end);
}
```
]

---

.tiny[
```C
long find_max(const long list[], long start, long end) {
  if (start >= end - 1) {
    return list[start];
  }
  long mid = (start + end) / 2;
  long left = find_max(list, start, mid);
  long right = find_max(list, mid, end);
  if (left >= right) {
    return left;
  }
  return right;
}
```
]

---

.smaller[
```C
long fib(long n) {
  if (n == 1 || n == 2) {
    return 1;
  }
  long first = 1;
  long second = 1;
  long third = 1;
  // each iteration advances one Fibonacci number; n - 2 iterations in total
  for (long i = 2; i < n; i += 1) {
    first = second;
    second = third;
    third = first + second;
  }
  return third;
}
```
]

---

.smaller[
```C
long fib(long n) {
  if (n == 1 || n == 2) {
    return 1;
  }
  return fib(n-1) + fib(n-2);
}
```
]

---
class: top,center

.fit[]

---
class:top,center

How many times is `fib()` called?

---

How many steps (in terms of $n$)?

.moretiny[
```C
long fib(long n) {
  if (n == 1 || n == 2) {
    return 1;
  }
  long first = 1;
  long second = 1;
  long third = 1;
  for (long i = 2; i != n; i += 1) {
    first = second;
    second = third;
    third = first + second;
  }
  return third;
}
```
]

---
class:center, middle

## The Big-O model of analysis

---

How do we try to predict how well our programs will perform?

--

We could look at every operation, figure out how much it costs, and manually count.

--

But operation costs change depending on the machine. A multiplication on Intel/AMD hardware might take a different amount of time compared to ARM hardware.

---
class:left, top

For an "initial gauge" of how well a program performs, we probably don't need to go into the nitty-gritty.

--

Counting the exact number of operations is tricky, cumbersome, and complicated.

--

But what we really care about is **how the number of operations scales as $n$ gets larger and larger**.

--

Put another way, let $T(n)$ be the time taken for the program to solve a problem of size $n$,

--

we care about the behaviour of $T(n)$, as $n \to \infty$.

---
class:center

E.g. when given $T(n) = \frac{n^4}{10} + 10000n^2 - n$, we want to say, $T(n)$ is "on the order of $n^4$".

--

$$O\left(\frac{n^4}{10} + 10000n^2 - n\right) = O(n^4)$$

We drop terms with a lower rate of growth and multiplicative constants.

---
class:center

.fit[]

---
class:center

#### Which of these runtimes scales better with $n$?

.fit[]

---

### Comparing Rate of Growth

$f(n)$ grows faster than $g(n)$ if we can find constants $n_0$ and $c > 0$ such that $f(n) > cg(n)$ for all $n \ge n_0$.

---
class: top, center

$f(n) = n^n$ vs. $g(n) = 3^n$

--

1. $f(1) = 1$ ; $g(1) = 3$

--

2. $f(2) = 4$ ; $g(2) = 9$

--

3. $f(3) = 27$ ; $g(3) = 27$

--

4. $f(4) = 256$ ; $g(4) = 81$

--

5. $f(5) = 3125$ ; $g(5) = 243$

---
class: top, center

$f(n) = n^n$ vs. $g(n) = 3^n$

.fit[]

---
class: top, center

$f(n) = n^n$ vs. $g(n) = 3^n$

* After some point, $f(n)$ dominates $g(n)$.

--

* So in this example: $g(n) = O(f(n))$.

---

### Running Time / Time Complexity

- A notion of how efficient an algorithm is
- In CS1010, we are interested in the _worst case_ running time
- Should be expressed in simplest big-O notation with tightest bound

---

.small[
```C
long foo(long x) {
  long y = x * 10;
  return y;
}
```
]

--

.small[
There are 2 lines, and each line has some **fixed number** of operations that does not depend on the input.
]

--

.small[
Let's say there are 4 operations in total (could be 6, could be 10; it doesn't matter). We say `foo` takes $O(4) = O(1)$ time. $O(1)$ time means constant time.
]

---

.tiny[
```C
size_t max(size_t n, const long list[]) {
  long max_so_far = list[0];
  size_t max_index = 0;
  // valid indices are 0 to n - 1
  for (size_t i = 1; i < n; i += 1) {
    if (list[i] > max_so_far) {
      max_so_far = list[i];
      max_index = i;
    }
  }
  return max_index;
}
```
]

--

.small[
* The loop body takes $O(1)$ time.
]

--

.small[
* There are $O(n)$ loop iterations.
]

--

.small[
* In total: $O(1) \times O(n) = O(n)$ time.
]

---

.tiny[
```C
void draw_line(long m, char* first, char* mid, char* last) {
  cs1010_print_string(first);
  for (int j = 0; j < m - 2; j += 1) {
    cs1010_print_string(mid);
  }
  cs1010_println_string(last);
}

void draw_rectangle(long m, long n) {
  draw_line(m, TOP_LEFT, HORIZONTAL, TOP_RIGHT);
  for (int i = 0; i < n - 2; i += 1) {
    draw_line(m, VERTICAL, " ", VERTICAL);
  }
  draw_line(m, BOTTOM_LEFT, HORIZONTAL, BOTTOM_RIGHT);
}
```
]

--

.tiny[
* `cs1010_print_string` takes $O(1)$ time here, since each string printed has a fixed length.
]

--

.tiny[
* The loop in `draw_line` takes $O(m)$ time.
]

--

.tiny[
* The loop in `draw_rectangle` runs for $O(n)$ iterations.
]

--

.tiny[
* Total time: $O(2m) + O(nm) = O(nm)$.
]

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i <= n - 1; i++) {
    if (n % i == 0) {
      return false;
    }
  }
  return true;
}
```
]

--

* `is_prime` takes $O(n)$ time.

---

.smaller[
```C
bool is_prime(long n) {
  for (long i = 2; i * i <= n; i++) {
    if (n % i == 0) {
      return false;
    }
  }
  return true;
}
```
]

--

* `is_prime` takes $O(\sqrt{n})$ time.

---

.smaller[
```C
void to_upper(char *s) {
  for (long i = 0; i < length_of(s); i++) {
    if (s[i] >= 'a' && s[i] <= 'z') {
      s[i] = s[i] - 'a' + 'A';
    }
  }
}
```
]

---

.tiny[
```C
long find_max(const long list[], long start, long end) {
  if (start >= end - 1) {
    return list[start];
  }
  long mid = (start + end) / 2;
  long left = find_max(list, start, mid);
  long right = find_max(list, mid, end);
  if (left >= right) {
    return left;
  }
  return right;
}
```
]

$T(n) = 2T(n/2) + 1$

--

$T(1) = 1$

---

### Solving $T(n)$

$T(n) = 2 T(n/2) + 1$

--

$T(n) = 2 (2 T(n/4) + 1) + 1$

--

$T(n) = 4 T(n/4) + 2 + 1$

--

$T(n) = 4 (2 T(n/8) + 1) + 2 + 1$

--

$T(n) = 8 T(n/8) + 4 + 2 + 1$

---

### Solving $T(n)$

$T(n) = 2 T(n/2) + 1$

$T(n) = 4 T(n/4) + 2 + 1$

$T(n) = 8 T(n/8) + 4 + 2 + 1$

---

### Solving $T(n)$

$T(n) = 2^i T(n/2^i) + \sum_{j = 0}^{i - 1} 2^j$

--

But when do we stop expanding?

--

When we hit the base case, $n = 1$. $T(1) = 1$.

--

$T(n/2^i) = T(1)$ when $i = \log(n)$ (base 2).

---

### Solving $T(n)$

$T(n) = 2^i T(n/2^i) + \sum_{j = 0}^{i - 1} 2^j$ with $i = \log(n)$

--

$T(n) = 2^{\log(n)} T(1) + \sum_{j = 0}^{\log(n) - 1} 2^j$

--

$T(n) = n \times T(1) + \sum_{j = 0}^{\log(n) - 1} 2^j$

---

### Solving $T(n)$

$T(n) = n \times T(1) + \sum_{j = 0}^{\log(n) - 1} 2^j$

--

Using the sum of a geometric progression:

$$ \sum_{j = 0}^{\log(n) - 1} 2^j = \frac{2^{\log(n)} - 1}{2 - 1} = n - 1 = O(n) $$

---

### Solving $T(n)$

$T(n) = n \times T(1) + n - 1$

--

$T(n) = n \times 1 + n - 1 = O(n)$

---

### Finding Fibonacci Numbers

.tiny[
```C
long fib(long n) {
  if (n == 1 || n == 2) {
    return 1;
  }
  return fib(n-1) + fib(n-2);
}
```
]

---
class:top

### Simple bound for Fibonacci:

$T(n) = T(n - 1) + T(n - 2) + 1$

$T(1) = T(2) = 1$

--

$T(n) \leq T(n - 1) + T(n - 1) + 1$

--

$T(n) \leq 2T(n-1) + 1$

---
class:top

### Simple bound for Fibonacci:

$T(n) \leq 2T(n-1) + 1$

--

$T(n) \leq 2(2T(n-2) + 1) + 1$

--

$T(n) \leq 4T(n-2) + 2 + 1$

--

$T(n) \leq 4(2T(n-3) + 1) + 2 + 1$

--

$T(n) \leq 8T(n-3) + 4 + 2 + 1$

---
class:top

### Simple bound for Fibonacci:

$T(n) \leq 2^i T(n-i) + \sum_{j = 0}^{i - 1} 2^j$

--

$T(n) \leq 2^{n - 1} T(1) + \sum_{j = 0}^{n - 2} 2^j$

--

$T(n) \leq 2^{n - 1} \times 1 + \sum_{j = 0}^{n - 2} 2^j$

--

$T(n) \leq 2^{n - 1} + \sum_{j = 0}^{n - 2} 2^j$

---
class:top

### Simple bound for Fibonacci:

$T(n) \leq 2^i T(n-i) + \sum_{j = 0}^{i - 1} 2^j$

$T(n) \leq 2^{n - 1} T(1) + \sum_{j = 0}^{n - 2} 2^j$

$T(n) \leq 2^{n - 1} \times 1 + \sum_{j = 0}^{n - 2} 2^j$

$T(n) \leq \sum_{j = 0}^{n - 1} 2^j$

---
class:top

### Simple bound for Fibonacci:

$T(n) \leq 2^i T(n-i) + \sum_{j = 0}^{i - 1} 2^j$

$T(n) \leq 2^{n - 1} T(1) + \sum_{j = 0}^{n - 2} 2^j$

$T(n) \leq 2^{n - 1} \times 1 + \sum_{j = 0}^{n - 2} 2^j$

$T(n) \leq \sum_{j = 0}^{n - 1} 2^j = \frac{2^n - 1}{2 - 1} = 2^n - 1 = O(2^n)$

---

### Run-time analysis is not always trivial

.tiny[
```C
long count_num_of_steps(long n) {
  long num_of_steps = 0;
  while (n != 1) {
    n = collatz(n);
    num_of_steps += 1;
  }
  return num_of_steps;
}
```
]

---
### Homework

- Quiz 8
- Exercise 5
- Problem Set 20

---
class: bottom

.tiny[
]